Overview

In today’s data-driven world, it’s easy to get caught up in advanced architectures and cutting-edge tools. But before diving into complex solutions, understanding the fundamentals is critical. One of the most important distinctions in data processing is Batch vs Streaming (Real-Time). These two paradigms define how data moves, how it’s processed, and ultimately how insights are delivered.

Batch processing has been the backbone of analytics for decades, enabling organizations to process large volumes of data at scheduled intervals. Streaming, on the other hand, focuses on processing data as it arrives, delivering insights in real time. Both approaches have their place, and knowing when to use each is essential for building efficient, cost-effective, and scalable solutions.

In this article, we’ll break down what batch and streaming mean, how they work, and why understanding these basics is the foundation for any modern data strategy.

How It Works: Batch vs Streaming

Batch Processing: The Traditional Workhorse

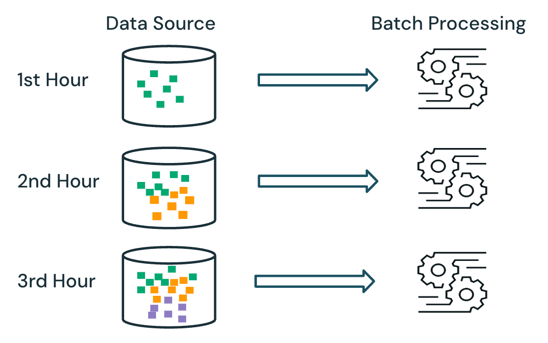

Batch processing involves collecting data over a period of time and processing it in bulk. This approach is ideal for scenarios where immediate insights aren’t required. Key characteristics include:

- Scheduled Execution: Data is processed at defined intervals (hourly, daily, weekly).

- High Throughput: Handles large volumes of data efficiently.

- Cost-Effective: Because workloads are predictable, compute can be sized for the job and run only during the scheduled window.

- Common Use Cases: Financial reporting, monthly billing, historical trend analysis.

Batch jobs are often simpler to implement and can leverage distributed systems for large-scale transformations. They are best suited for workloads where latency is not critical.
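To make the idea concrete, here is a minimal sketch of a batch job in plain Python. The records, the customer names, and the `run_batch_job` function are all hypothetical, made up for illustration; a real job would typically read from files or a warehouse and be triggered by a scheduler.

```python
from collections import defaultdict

# Hypothetical records accumulated over the day: (customer_id, amount).
collected = [
    ("alice", 20.0),
    ("bob", 5.5),
    ("alice", 12.5),
    ("bob", 4.5),
]

def run_batch_job(records):
    """Process the full accumulated data set in one pass; return totals per customer."""
    totals = defaultdict(float)
    for customer_id, amount in records:
        totals[customer_id] += amount
    return dict(totals)

# In practice a scheduler would call this once per interval (for example, nightly).
daily_totals = run_batch_job(collected)
print(daily_totals)
```

The key point is that nothing is computed until the whole set has been collected, so results are only as fresh as the last run.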

Streaming (Real-Time) Processing: Insights Without Delay

Streaming focuses on processing data as soon as it’s generated. Instead of waiting for a batch window, events are ingested and analyzed continuously. Key characteristics include:

- Low Latency: Data is typically processed within milliseconds or seconds of arriving.

- Continuous Flow: Events are handled as they arrive rather than on a schedule, which suits dynamic, fast-changing environments.

- Event-Driven Architecture: React to data as it happens.

- Common Use Cases: IoT telemetry, fraud detection, real-time dashboards, operational monitoring.

Streaming pipelines often require more complex infrastructure and careful design for scalability and fault tolerance. In return, they let organizations act within moments of an event, whether it’s detecting anomalies, updating dashboards, or triggering alerts.
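The same idea can be sketched in a few lines of plain Python. Here a generator stands in for an unbounded source such as a message queue; the sensor name, the readings, the threshold, and the function names are assumptions made up for this example, not part of any particular streaming platform.

```python
from typing import Iterable, Iterator

def sensor_events() -> Iterator[dict]:
    """Stand-in for an unbounded event source (hypothetical readings)."""
    for reading in (21.0, 22.5, 95.0, 23.0):
        yield {"sensor": "s1", "temperature": reading}

def detect_anomalies(events: Iterable[dict], threshold: float = 90.0) -> Iterator[dict]:
    """Inspect each event as it arrives and emit an alert immediately if it exceeds the threshold."""
    for event in events:
        if event["temperature"] > threshold:
            yield event

# Each alert is produced as soon as its event is seen, without waiting for the stream to end.
alerts = []
for alert in detect_anomalies(sensor_events()):
    print(f"ALERT: {alert['sensor']} reported {alert['temperature']}")
    alerts.append(alert)
```

Unlike the batch approach, each event is evaluated on arrival, so the reaction does not wait for a processing window. A production pipeline would also need to handle concerns this sketch ignores, such as retries, ordering, and recovery after failure.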

Why This Matters: The Benefits of Understanding the Basics

- Cost and Complexity: Streaming often requires more infrastructure and expertise. Batch can be simpler and cheaper for workloads that are not time-sensitive.

- Performance: Real-time systems shine for time-sensitive insights, while batch excels at large-scale historical analysis.

- Hybrid Strategies: Many organizations use both: streaming for operational intelligence and batch for deep analytics.

- Future-Ready Decisions: Knowing the trade-offs helps you choose the right tool for the job, avoiding over-engineering or under-delivering.

Final Thoughts

Before adopting advanced platforms or architectures, start with the basics: Do you need real-time insights, or will scheduled processing suffice? This simple question can save time, money, and complexity.

Batch processing remains a cornerstone for many analytics workflows, while streaming is essential for real-time responsiveness. The best strategies often combine both, leveraging their strengths to deliver a complete data solution.

Understanding these fundamentals isn’t just academic; it’s the foundation for building scalable, efficient, and future-ready data systems.

If you have questions or want to discuss how these concepts apply to your environment, feel free to reach out to me on LinkedIn!