Overview

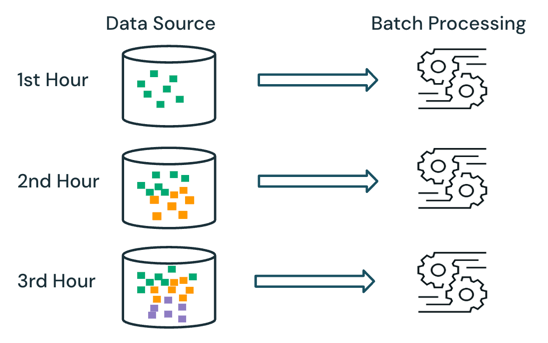

Organizations modernizing their data ecosystems now manage data flowing from cloud applications, SaaS platforms, on-premises systems, and streaming sources. Traditional ETL tools such as SQL Server Integration Services (SSIS) have long powered enterprise data warehousing and batch integration, offering a robust, code-optional environment for building data workflows. SSIS provides a Windows-based, SQL Server-centric platform for extracting, transforming, and loading data using visual design tools, built-in connectors, and highly customizable task orchestration.

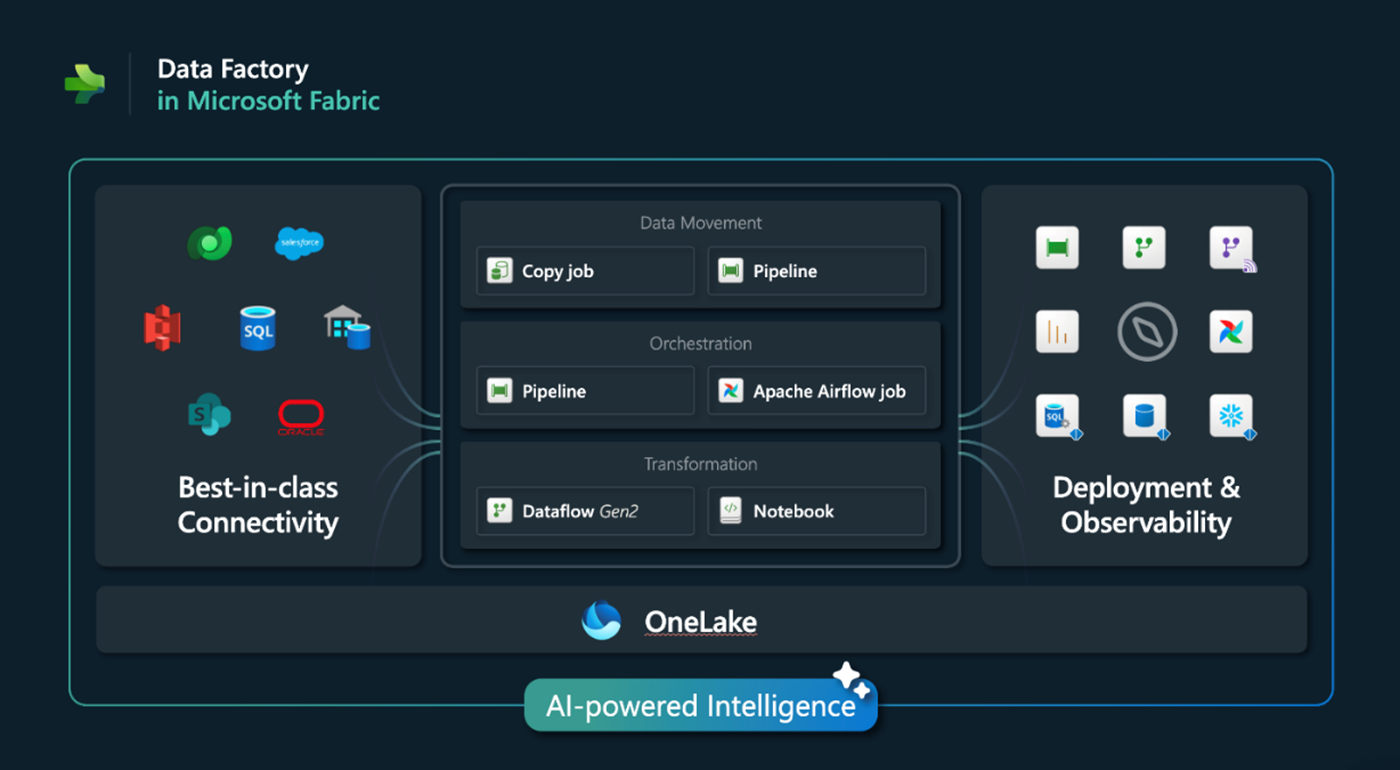

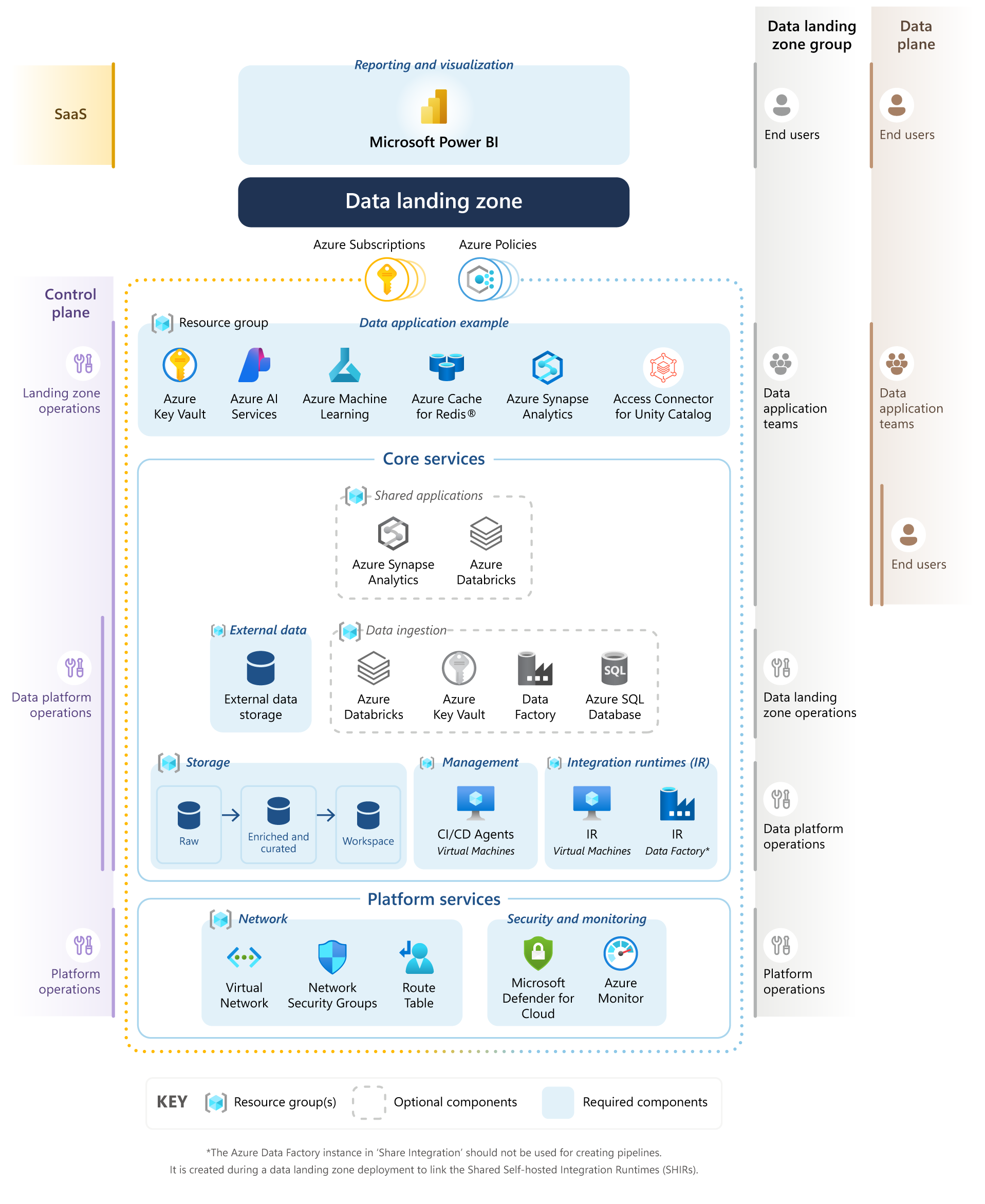

Microsoft Fabric introduces a different approach with Fabric Data Factory, a cloud-scale, fully managed data integration experience built on the unified Fabric platform. As the next evolution of Azure Data Factory, Fabric Data Factory offers more than 170 connectors, AI-assisted transformations, hybrid connectivity, and native integration with OneLake, making it well suited to cloud-first analytics and distributed architectures.

Rather than relying on a single on-premises integration engine, organizations now align workloads to cloud-native services that scale elastically, integrate across multicloud environments, and unify data ingestion with analytics and AI capabilities.

Key Differences Between SSIS and Fabric Data Factory
