Overview

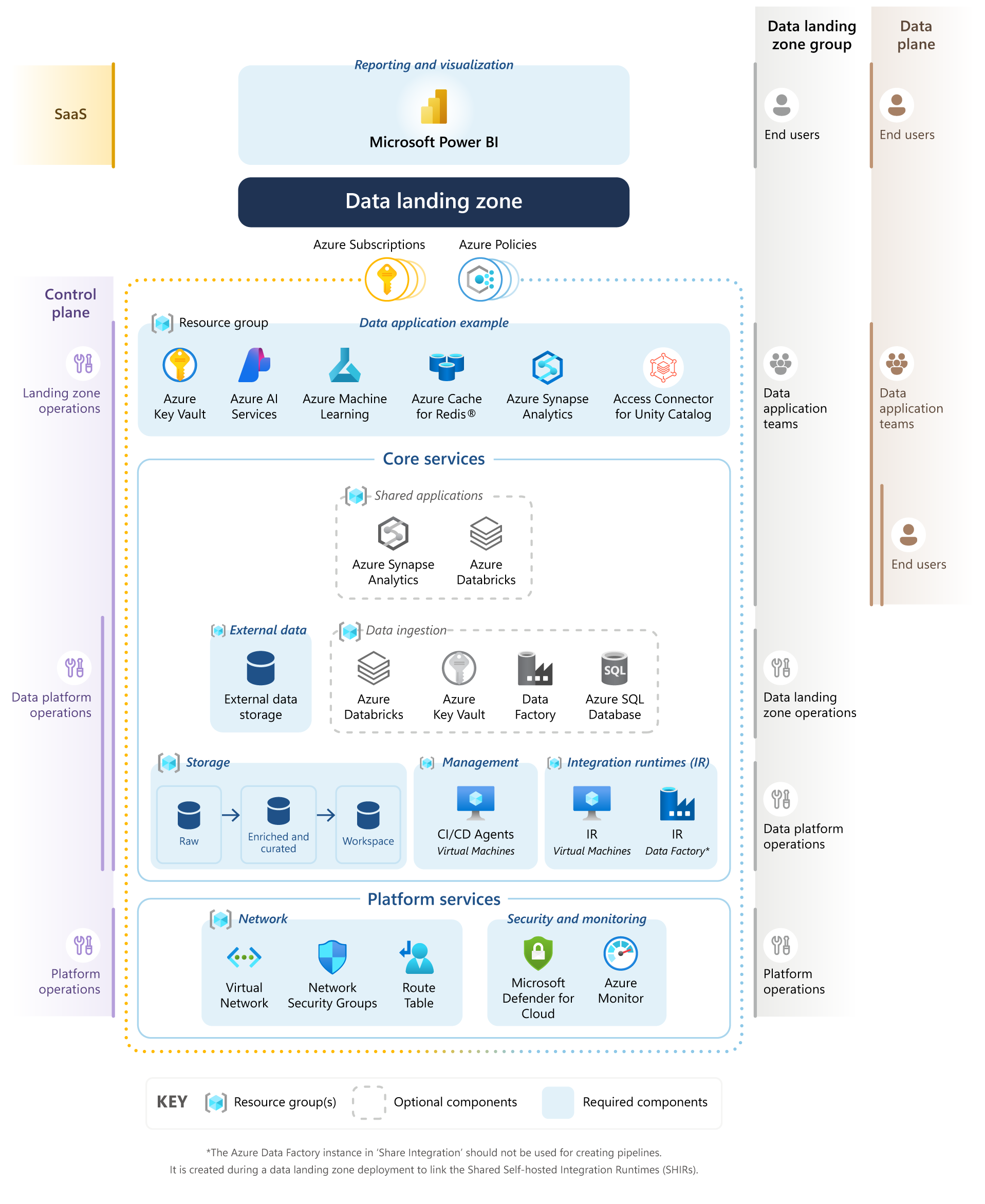

Organizations building modern analytics platforms on Azure often start with Azure Data Lake Storage Gen2 as their foundational storage layer. Azure Data Lake is highly scalable, cost-effective, and flexible, making it an attractive landing zone for raw data of all types. However, without strong governance, modeling, and processing layers, many data lakes gradually devolve into what is commonly called a data swamp.

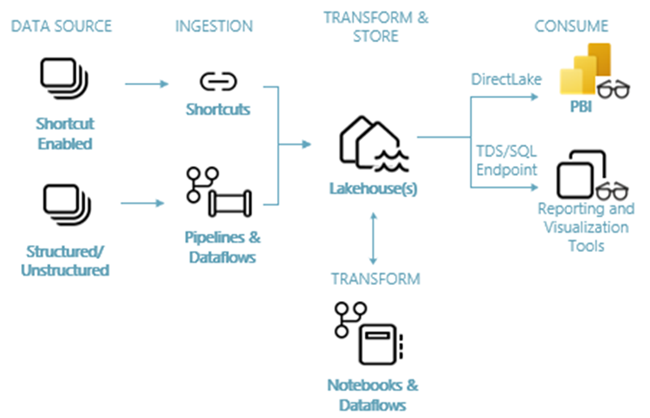

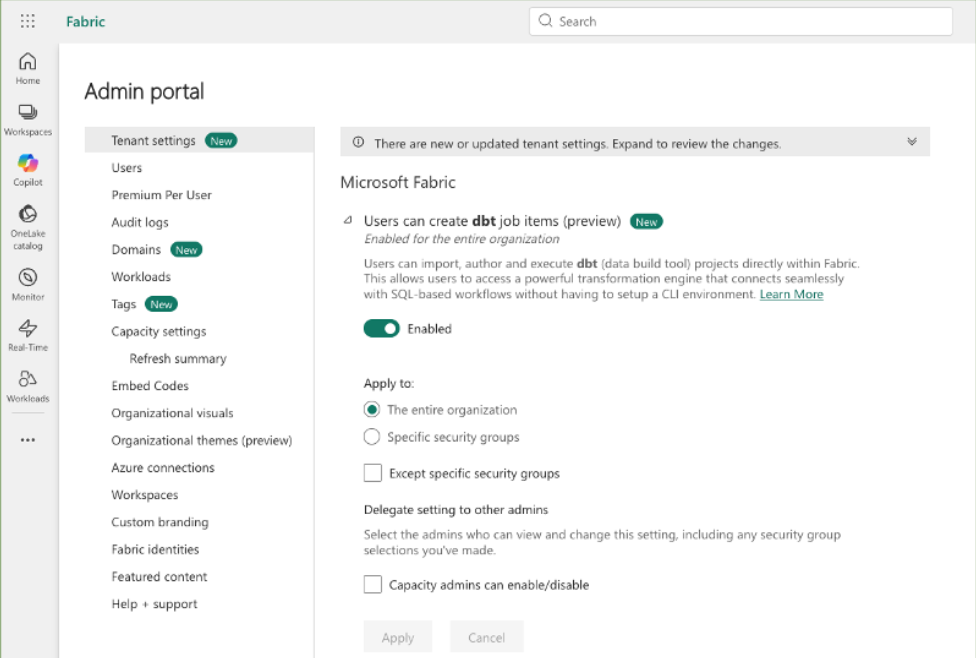

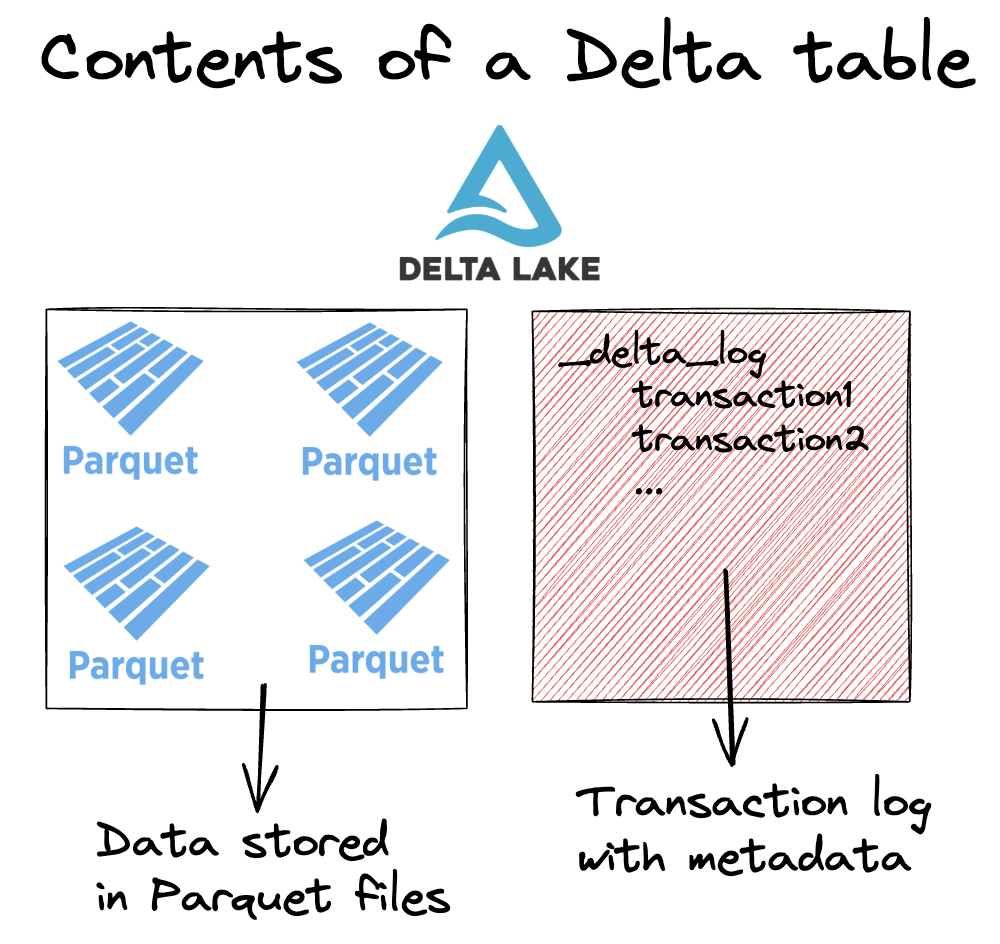

Microsoft Fabric Lakehouse was introduced to address these challenges by combining the openness of a data lake with the structure, governance, and usability of a data warehouse. Unlike a traditional data lake, Fabric Lakehouse is delivered as a fully managed SaaS experience that tightly integrates storage, compute, governance, security, and analytics into a single platform.

Understanding the differences between Azure Data Lake and Fabric Lakehouse is critical when designing scalable, maintainable, and business-ready data architectures.

Azure Data Lake Storage Gen2
